So, a recent post on Reddit highlighted a Mitch Hedberg joke.

“La Quinta” is Spanish for “next to Denny’s.”

Thinking about this, I realized we could use GIS to find the number of La Quintas that are next to Denny’s. Last night after the kids were asleep, I sat down with a beer (Sierra Nevada) and figured out how I could get the data and perform the calculation.

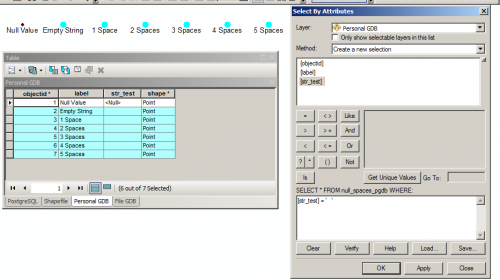

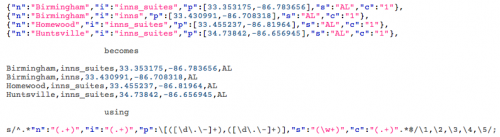

First, I visited La Quinta’s website and their interactive map of hotel locations. Using Firebug, I found “hotelMarkers.js” which contains the locations of the chain’s hotels in JSON. Using a regular expression, I converted the hotel data into CSV.

Sample of the data. Click for full size.

Next, I went to Denny’s website and their map. Denny’s uses Where2GetIt to provide their restaurant locations. They provide a web service to return an XML document containing the restaurants near the user’s location (via GeoIP) or by a specified address. Their web service also has a hard limit of 1,000 results returned per call. Again, I used Firebug to get the URL of the service, then changed the URL to search for locations near Washington, DC and Salt Lake City, Utah, with the result limit set to 1000 and the radius set to 10000 (miles, presumably). From this, I was able to get an “east” and “west” set of results for the whole country. These results were in XML, so I wrote a quick Python script to convert the XML to CSV.

#!/usr/bin/env python

import sys

from xml.dom.minidom import parse

dom = parse(sys.argv[1])

tags = ("uid", "address1", "address2", "city", "state", "country", "latitude", "longitude")

print ",".join(tags)

for poi in dom.getElementsByTagName("poi"):

values = []

for tag in tags:

if len( poi.getElementsByTagName(tag)[0].childNodes ) == 1:

values.append( poi.getElementsByTagName(tag)[0].firstChild.nodeValue )

else:

values.append("")

print ",".join( map(lambda x: '"'+str(x)+'"', values) )

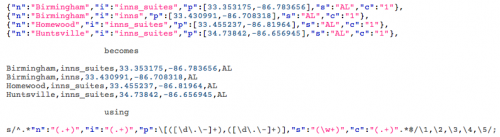

I then loaded the CSVs into PostgreSQL. First, I needed to remove the duplicates from my two Denny’s CSVs.

CREATE TABLE dennys2 (LIKE dennys);

INSERT INTO dennys2 SELECT DISTINCT * FROM dennys;

DROP TABLE dennys;

ALTER TABLE dennys2 RENAME TO dennys;

Then I added a Geometry column to both tables.

SELECT AddGeometryColumn('laquinta', 'shape', 4326, 'POINT', 2);

UPDATE laquinta SET shape = ST_SetSRID(ST_MakePoint(longitude, latitude),4326);

SELECT AddGeometryColumn('dennys', 'shape', 4326, 'POINT', 2);

UPDATE dennys SET shape = ST_SetSRID(ST_MakePoint(longitude, latitude),4326);

From there, finding all of the La Quinta hotels that live up to their name quite easy.

SELECT d.city, d.state, d.shape <-> l.shape as distance,

ST_MakeLine(d.shape, l.shape) as shape

FROM dennys d, laquinta l

WHERE (d.shape <-> l.shape) < 0.001 -- 'bout 100m

ORDER BY 3;

Here are the 29 cities that are home to La Quinta – Denny’s combos:

- Mobile, AL

- Phoenix, AZ

- Bakersfield, Irvine, & Tulare, CA

- Golden, CO

- Orlando & Pensacola, FL

- Augusta & Savannah, GA

- Lenexa, KS

- Metairie, LA

- Amarillo, Austin, Brenham, College Station, Corpus Christi, Dallas, El Paso (2!), Galveston, Irving, Killeen, Laredo, Lubbock, McAllen, San Antonio, & The Woodlands, TX.

You might have noticed El Paso, Texas, has two La Quinta – Denny’s combos. Both happen to also be on the same street, just a few miles apart.

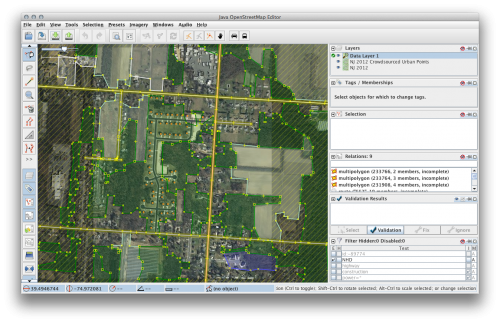

Gateway Boulevard to the West…

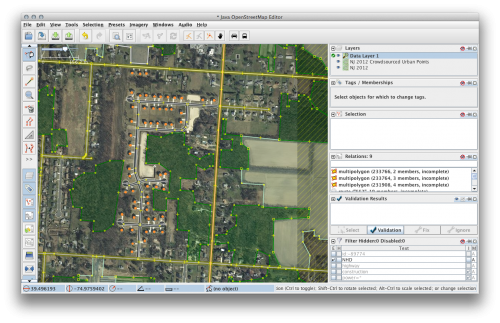

and further east…

I love that the second one even shares a post for both of their highway-scale signage.

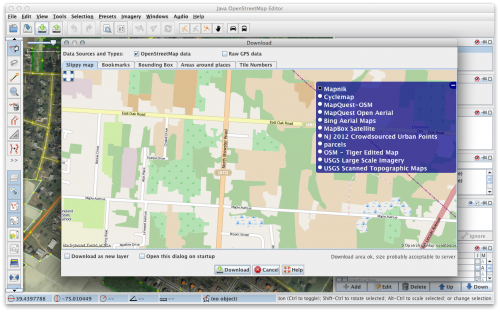

So out of the 833 La Quintas and 1,675 Denny’s, there are 29 that are very close (if not adjacent) to one another. So, only 3.4% of the La Quintas out there live up to Mitch Hedberg’s expectations.

GIS: coming up with solutions for the problems no one asked!

Update: Chris in the comments made a good point about projection. So here’s the data reprojected into US National Atlas Equal Area and then limited to 150 meters distance between points.

SELECT d.city, d.state, ST_Transform(d.shape,2163) <-> ST_Transform(l.shape,2163) as distance

FROM dennys d, laquinta l

WHERE (ST_Transform(d.shape,2163) <-> ST_Transform(l.shape,2163)) < 150

ORDER BY 3;

This yields 49 pairs (or 5.8% of all La Quintas):

- Alabama: Huntsville, Huntsville, Mobile

- Arizona: Phoenix, Tempe, Tucson

- California: Bakersfield, Bakersfield, Irvine, South San Francisco, Tulare

- Colorado: Golden

- Florida: Cocoa Beach, Orlando, Pensacola, St Petersburg

- Georgia: Augusta, Savannah

- Illinois: Schaumburg

- Indiana: Greenwood

- Kansas: Lenexa

- Louisiana: Metairie

- New Mexico: Albuquerque

- Oregon: Salem

- Texas: Amarillo, Austin, Brenham, College Station, Corpus Christi, Dallas, Dallas, El Paso, El Paso, Galveston, Irving, Killeen, Laredo, Live Oak, Lubbock, McAllen, San Antonio, San Antonio, San Antonio, Victoria, The Woodlands

- Utah: Midvale

- Virginia: Virginia Beach

- Washington: Auburn, Seatac

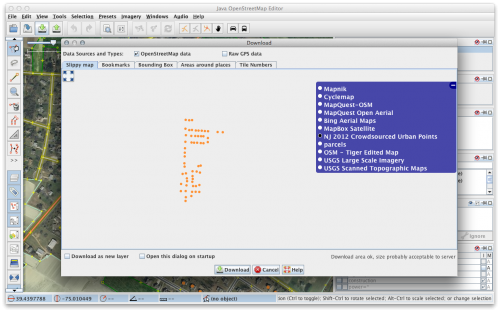

This modification to the query introduced a new oddity: Huntsville, AL has a Denny’s that is between two La Quintas, which is why it’s on the revised list twice.

A Denny’s adjacent to one La Quinta and about 400 feet from another…

Update: I uploaded a dump of the data to Github, if you want to explore the data on your own.