The New Jersey Land Change Viewer, the online component of the Changing Landscapes research project, required the generation of approximately one million map tiles. These tiles needed to be served quickly – the online viewer is meant to make the findings of the project and the ramifications of New Jersey’s urbanization patterns readily apparent to the general public. Long wait times do not help get your point across, so we used Amazon Web Services to store and distribute the map tiles.

About a month before we started the project, we purchased a license for a VMware server through the Rowan Network and System Services. gis.rowan.edu was born, hosting a basic website about our GIS program and the services we provide, as well as an instance of GeoServer for rendering web maps. I was excited to have the server, as I had only done minimal tinkering with GeoServer in the past. New Jersey State Atlas, my web mapping project site, uses MapServer, as I was able to kludge together enough of the libraries in my user space on Dreamhost shared hosting. I was satisfied with the maps produced by MapServer; however, its map configuration files are not the most user-friendly format out there. As I want to eventually introduce server-side GIS and web mapping to the Rowan GIS students, I installed GeoServer on our server. I believe GeoServer is better suited to that task because of its spectacular user interface. I also found it pretty straightforward and useful for quickly managing and serving several data sets.

I also employed TileCache to store rendered tiles for our interactive maps. TileCache is also deployed on NJ State Atlas; without it I don’t think that site would be practical to keep running. Rendering data from disk for each request is time consuming and unnecessary if the data is not frequently updated. The need for efficiency is even greater in a shared hosting environment. While developing the animated maps for this project, I found that it would not have been possible – given our resources and timeframe – without TileCache.

After the Urban Change map was loaded into GeoServer and TileCache configured, we noticed immediately that even after initial rendering, the map took an incredibly long amount of time to load. We performed a small non-scientific stress test using 4 on-campus computers. The load times for the map stretched into the 1 to 2 minute range. Anything beyond a few seconds was unacceptable. The wait was not only long, but because the animation was timed to start after the tiles had loaded, it seemed like the page was broken. We were happy with the map once it loaded, but we knew we couldn’t move forward unless we figured out a way to serve the tiles faster.

I set out to tackle two issues: wrapping my head around some content-delivery system and optimizing the GIS server to reduce latency as much as possible. My initial finding upon consulting the oracle regarding a CDN was a PHP script called PHPGeoTiles, which claims to be able to load map tiles into Google App Engine. This was a non-starter for me, as the developer hosted the script on RapidShare (which I previously thought was only used for warez and porn) instead of on his own site, and the script had been deleted. I googled some more and found a discussion of cloud-based tile caching where fellow New Jerseyan Brian Flood mentioned the release of Arc2Earth Cloud. While it was exciting and capable of doing what I needed, I was committed to rendering and serving the tiles off of the Linux GIS server – we were under a strict timeframe and I couldn’t move everything back to my unreliable Windows desktop. The latest versions of TileCache support storing data in an Amazon S3 bucket, so I started to do some research on Amazon Web Services. I felt that this could be the solution, so in a gesture that proved John Hasse’s faith in me, he handed me his credit card to sign up for AWS.

After some initial testing, AWS proved itself to be the right choice. However, I was still dismayed by a back-of-the-napkin calculation: we planned on 24 map layers/animation frames across the 6 maps of the report. The maps were restricted to Google zoom levels 8 through 15, requiring over 60,000 tiles for each layer to cover all of New Jersey. At the time, the GIS server was rendering individual tiles at a rate of just under one tile per second. We had ten days until the release date and approximately 1.5 million tiles to create. By that measure, it would take seventeen days rendering the tiles non-stop to be ready for the release. Something had to be done.
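The napkin math is easy to reproduce. Below is a minimal sketch that uses only the round figures quoted above; the function and variable names are mine, and the one-tile-per-second rate is an optimistic rounding of "just under one tile per second".

```python
# Back-of-the-napkin estimate using the round figures quoted in the text.
SECONDS_PER_DAY = 24 * 60 * 60


def render_days(total_tiles, tiles_per_second):
    """Days of non-stop rendering needed to produce total_tiles."""
    return total_tiles / tiles_per_second / SECONDS_PER_DAY


layers = 24                 # map layers / animation frames
tiles_per_layer = 60_000    # "over 60,000", so this is a lower bound
lower_bound = layers * tiles_per_layer

planned_total = 1_500_000   # the rounded total used in the text

print(f"{lower_bound:,} tiles at minimum")
print(f"{render_days(planned_total, 1.0):.1f} days at one tile per second")
```

Because the server was actually rendering slightly slower than one tile per second, the real figure would have been a little worse than this estimate.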

Considering my previous “production” experience with tile rendering was in a shared hosting environment, I had considered “working” to be a major victory. Simply working wouldn’t fly in this case. The difference now is that I had root access to this machine and could configure it appropriately. Before now, I had not worked with Tomcat or JSP and I have no real background in Java, so I was incredibly grateful that the GeoServer documentation has a great writeup on optimizing GeoServer and its container for a production environment. I installed native JAI and tweaked Tomcat to start with the -server flag and a larger heap. TileCache was next and the most apparent improvement was found by moving to mod_python for the maps still running off of gis.rowan.edu. Even though we still went with delivering the tiles via Amazon CloudFront, TileCache under mod_python reduced the load time from approximately 1:30 down to about 5 seconds.

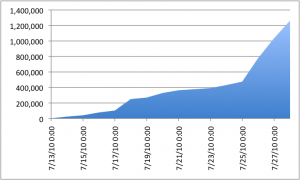

Number of tiles in our S3 bucket leading up to the report release date.

What saved the day was TileCache’s ability to render metatiles: instead of requesting individual 256×256 tiles, TileCache requests a 2560×2560 WMS image, which it then splits and stores as individual tiles. The difference can be clearly seen in the graph above. We now had the spigot all the way open and were filling the S3 bucket like mad.
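To make the metatile idea concrete, here is a rough sketch of the bookkeeping involved in cutting one large image into tiles. This is not TileCache's own code; `split_metatile` is a hypothetical helper, and the sizes are simply the ones mentioned above. Each box is a (left, upper, right, lower) pixel rectangle of the kind an imaging library would take as a crop region.

```python
def split_metatile(meta_px=2560, tile_px=256):
    """Yield (col, row, box) for every tile inside a square metatile.

    box is (left, upper, right, lower) in pixels, measured from the
    top-left corner of the metatile image.
    """
    if meta_px % tile_px:
        raise ValueError("metatile size must be a multiple of the tile size")
    per_side = meta_px // tile_px
    for row in range(per_side):
        for col in range(per_side):
            left, upper = col * tile_px, row * tile_px
            yield col, row, (left, upper, left + tile_px, upper + tile_px)


boxes = list(split_metatile())
print(len(boxes), "tiles from a single WMS request")
```

With these sizes, one request to the map server stands in for a hundred individual tile requests, which is where the speedup comes from.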

I still ran into some issues resulting in GeoServer seizing up after several hours’ worth of tile caching. Seeding the tile cache would fail because GeoServer would return a 500 error. What caused the most head scratching is that most of the time, when pasting the same request that caused TileCache to fail into my web browser, I would receive a perfectly normal tile as if nothing was wrong. (Occasionally Tomcat would hang, but that was before I settled on the right mix of startup flags.) Due to my lack of Java knowledge, I wasn’t able to make much sense out of the errors returned by GeoServer/Tomcat, so I developed a workaround in TileCache. I modified the Client.py module to try the failed request a second time after 5 seconds, dying if the second chance was also met with failure. It seems that GeoServer needs a break once in a while, as that seemed to solve all my intermittent crashing issues. I could now return to anxiously waiting for tilecache_seed.py to complete so I could start loading the next map into the cache.
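The second-chance logic is simple enough to sketch in a few lines. This is an illustration of the idea, not the actual change made to Client.py: `fetch_with_second_chance` and `flaky_fetch` are hypothetical names, and the fake fetcher stands in for a real WMS request so the example runs without a server.

```python
import time


def fetch_with_second_chance(fetch, url, wait_seconds=5, sleep=time.sleep):
    """Call fetch(url); if it fails, wait and try exactly once more."""
    try:
        return fetch(url)
    except Exception:
        sleep(wait_seconds)   # give the map server a short break
        return fetch(url)     # a second failure propagates to the caller


# Stand-in for a request that fails once and then succeeds.
calls = []


def flaky_fetch(url):
    calls.append(url)
    if len(calls) == 1:
        raise IOError("HTTP 500")
    return b"tile-bytes"


# The sleep is stubbed out here so the demonstration does not pause.
tile = fetch_with_second_chance(
    flaky_fetch, "http://example.invalid/wms", sleep=lambda seconds: None
)
print(len(calls), "attempts,", len(tile), "bytes received")
```

Retrying only once keeps a genuinely broken request from looping forever, while still riding out the occasional hiccup.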

Tomorrow: Release Day

hi John

excellent article and congrats on the great website!

thanks for mentioning Arc2Earth in the mix. I just wanted to clarify a couple points. first, you don’t need a Cloud instance for cutting tiles. You can do it directly from ArcMap (or the command line) and the resulting tiles are copied locally, a web server or to Amazon S3.

also, we use the metatile tile concept as well when cutting. Tile chunks are 5×5 tiles in size, this dramatically helps in cutting duration as well as with labeling anomalies across tile borders

finally, you can use the new Change Detection Level to really cut down on tile creation the next time you run your data. Essentially, it will look into an existing cache and run a binary comparison on potential tiles and existing ones, if they are identical it will eliminate that entire tile extent in the underlying levels. For instance, if you have a 100 changes to your Land Use data spread all over the state, it will find those areas in the cache and only update those extents at the lower levels (which in large caches, can produce an order of magnitude in time savings)

again, thanks for the mention!

cheers

brian